Earlier this year, a robocall in New Hampshire used an AI-generated imitation of President Joe Biden's voice.[1] The call reached thousands of voters, urging them not to vote in the upcoming primary election. The deepfake audio was created by a New Orleans magician, who reportedly needed only 20 minutes and artificial intelligence (AI) software to produce it.[2]

Deepfake technology has drawn growing attention as it continues to cause problems for individuals and organizations alike. Pop star Taylor Swift has been one of its targets, prompting celebrities, politicians, technology experts, and others to demand stricter laws on its use.[3]

But what is deepfake technology, and how can you recognize it?

What is a deepfake?

Deepfakes are hyper-realistic digital manipulations of audio, image, or video content that make it appear as though people said or did things they never actually said or did. The term "deepfake" combines "deep learning" and "fake," reflecting the AI techniques used to create these falsified images, videos, and audio recordings. Deepfakes are becoming more prevalent across industries: the number of deepfake incidents detected worldwide increased tenfold from 2022 to 2023.[4]

Creating deepfakes is simple, and the technology to do so is readily available. A common approach uses a Generative Adversarial Network (GAN), which pits two neural networks against each other: a generator and a discriminator. The generator produces fake images or sounds, while the discriminator tries to tell which samples are real and which are fake. Each network's results become the other's training signal: as the discriminator gets better at spotting fakes, the generator learns to produce more realistic ones. Through this machine learning (ML) process, the generator eventually becomes very good at producing realistic content, making the deepfake believable to viewers.[5]
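To make the generator-versus-discriminator loop concrete, here is a deliberately tiny, pure-Python GAN in one dimension. Everything in it is an illustrative assumption: the "real" data are numbers drawn from a normal distribution centered at 4, the generator simply shifts random noise by a learned offset `b`, and the discriminator is a single logistic unit with weights `w` and `c`. Real deepfake models use deep neural networks on images or audio, but the alternating updates follow the same pattern.

```python
import math
import random

REAL_MEAN = 4.0  # toy "real" data: samples drawn from N(4, 1)


def sigmoid(s: float) -> float:
    # Numerically stable logistic function.
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def discriminator(x: float, w: float, c: float) -> float:
    """Probability the discriminator assigns to x being real."""
    return sigmoid(w * x + c)


def discriminator_step(w, c, x_real, x_fake, lr=0.1, decay=0.01):
    """One gradient step on -[log D(real) + log(1 - D(fake))], plus L2 decay on w."""
    d_real = discriminator(x_real, w, c)
    d_fake = discriminator(x_fake, w, c)
    grad_w = (d_real - 1.0) * x_real + d_fake * x_fake + decay * w
    grad_c = (d_real - 1.0) + d_fake
    return w - lr * grad_w, c - lr * grad_c


def generator_step(b, w, c, z, lr=0.1):
    """One gradient step on the generator loss -log D(G(z)), where G(z) = z + b."""
    d_fake = discriminator(z + b, w, c)
    grad_b = (d_fake - 1.0) * w  # chain rule through the logit s = w * (z + b) + c
    return b - lr * grad_b


def train(steps=2000, seed=0):
    rng = random.Random(seed)
    w, c, b = 0.0, 0.0, 0.0  # discriminator (w, c) and generator (b) parameters
    for _ in range(steps):
        x_real = rng.gauss(REAL_MEAN, 1.0)
        x_fake = rng.gauss(0.0, 1.0) + b
        w, c = discriminator_step(w, c, x_real, x_fake)   # discriminator learns to tell them apart
        b = generator_step(b, w, c, rng.gauss(0.0, 1.0))  # generator learns to fool it
    return b
```

`train()` returns the generator's learned offset. You would expect it to drift toward `REAL_MEAN` as the discriminator's feedback pushes it, although even toy GANs like this one can oscillate instead of settling, which is one reason real GAN training is finicky.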

For example, consider the deepfake videos of Tom Cruise that went viral in 2021. The creator used a combination of visual effects and editing software to make the subject look almost identical to the actor. Although most people were able to tell that the face swap was fake, the videos spread widely and served as a warning that deepfakes continue to improve in quality and prevalence.[6]

This is just one of many deepfakes to gain widespread attention. Awareness and understanding will be key as the technology continues to advance.

Deepfake technology in the cybersecurity threat landscape

Not all deepfake technology is malicious; it has legitimate uses, especially in arts and entertainment. For example, the Disney+ series The Mandalorian used deepfake technology to show a younger Luke Skywalker, the character played by Mark Hamill, who is now in his 70s.[7] Beyond film and television, the technology is also used in video gaming, education, art, and even the restoration of damaged media.

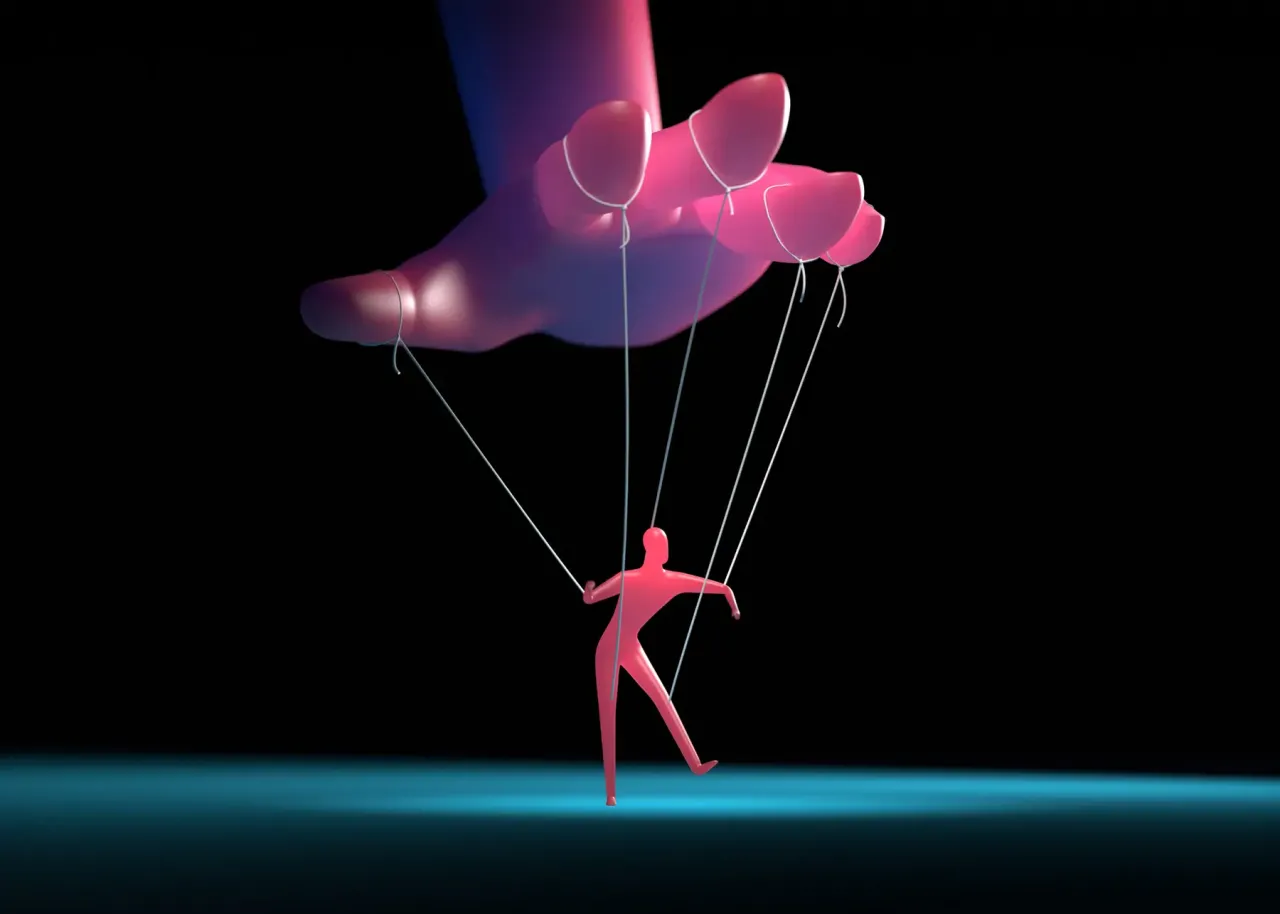

However, some uses of deepfake technology can be dangerous. Deepfakes raise significant ethical concerns, including their potential use in misinformation campaigns, political manipulation, fake news, compromising content, and fraud. They can undermine trust in digital media and have serious implications for privacy, security, and democracy. The deepfake of President Biden's voice before the New Hampshire primary shows how the technology could be used to manipulate an election.

In another example, a woman in Hong Kong paid out HK$200 million (about £20 million) of her firm's money to threat actors after a deepfake video conference call. Someone posing as a senior officer of the company asked her to transfer money to designated bank accounts, and, unable to identify the deepfake, she complied.[8] As threat actors grow more sophisticated, it's important to remain vigilant in identifying and protecting against deepfakes.

Stay aware of deepfake technology

The technology behind AI and deepfakes is advancing rapidly. Although, as noted above, deepfakes have legitimate and beneficial uses, their malicious use has caused global concern. Several countries, including China, South Korea, the United Kingdom, Japan, and India, have implemented or are considering AI regulations.[9]

While there were no US federal laws on deepfakes at the time of writing, at least 10 states have legislation that targets people who create or share explicit deepfake content. There is also growing concern about how deepfakes could be used for election interference.[10] Technology companies are increasingly being called on to address AI-generated misinformation.[11]

How to recognize deepfake AI technology

Even with global and national efforts under way to rein in malicious use, you can take measures to help identify deepfakes and mitigate the risks associated with them. Here are five common ways to spot a deepfake:[12]

Facial distortion

Most deepfakes transpose one person's face onto another person's body. Although some high-quality deepfakes are very convincing, faithfully reproducing skin and the movement of the eyes, nose, and mouth is hard to perfect. Lighting and shadows on the face may also be inconsistent with the rest of the scene.

Hands

Hands are challenging for artists to draw, and AI tools share the same struggle. Some AI-generated content shows hands with missing or extra fingers, misaligned joints, or an unnatural grip on objects.

Audiovisual mismatch

Look for glitches in synchronization between audio and video. For example, lip movements may not match the spoken words, or there may be unintelligible background noise introduced by audio filters.
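One way to check audiovisual sync automatically is to ask at what time offset mouth movement and speech line up best. The sketch below assumes you already have two per-frame signals, both hypothetical inputs here: a mouth-openness value from some face tracker and the audio loudness for the same frames. It slides one signal against the other and returns the shift (in frames) with the highest correlation. A large offset, or a weak best score, is a hint rather than proof that audio and video may have been stitched together.

```python
import math


def normalize(signal: list[float]) -> list[float]:
    """Center a signal on zero and scale it to unit length."""
    mean = sum(signal) / len(signal)
    centered = [x - mean for x in signal]
    norm = math.sqrt(sum(x * x for x in centered)) or 1.0  # avoid dividing by zero
    return [x / norm for x in centered]


def best_lag(mouth: list[float], audio: list[float], max_lag: int) -> tuple[int, float]:
    """Return (lag, score): the shift in frames where audio[i + lag] best matches mouth[i]."""
    m, a = normalize(mouth), normalize(audio)
    best_shift, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Correlate only the frames where both signals overlap at this shift.
        score = sum(m[i] * a[i + lag] for i in range(len(m)) if 0 <= i + lag < len(a))
        if score > best_score:
            best_shift, best_score = lag, score
    return best_shift, best_score


# Toy example: speech energy lags the mouth movement by 2 frames.
mouth = [0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 0, 0]
audio = [0, 0] + mouth[:-2]
print(best_lag(mouth, audio, max_lag=5)[0])  # 2
```

Real detectors use learned audio and lip features rather than raw loudness, but the underlying question (do the two streams agree in time?) is the same.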

Check the source

Compare the content with known source material showing the same scene or the same person, and look for discrepancies. You may also be able to check the file's metadata.
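Comparing against a known source can be partly automated with a perceptual hash, which stays stable under minor changes such as re-encoding but shifts when image content changes. Below is a minimal sketch of the well-known "average hash" technique. It assumes each frame has already been decoded and shrunk to an 8x8 grid of grayscale values (for example with an imaging library, not shown); `likely_same_image` and its threshold of 5 are hypothetical choices for illustration.

```python
def average_hash(grid: list[list[int]]) -> int:
    """64-bit average hash of an 8x8 grid of grayscale values (0-255)."""
    pixels = [p for row in grid for p in row]
    if len(pixels) != 64:
        raise ValueError("expected an 8x8 grid")
    mean = sum(pixels) / 64
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)  # 1 = brighter than average
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def likely_same_image(a, b, max_distance=5):
    """Hypothetical threshold: small distances suggest the same underlying picture."""
    return hamming_distance(average_hash(a), average_hash(b)) <= max_distance


source = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]
brighter = [[p + 3 for p in row] for row in source]  # slightly brightened copy
edited = [row[:] for row in source]
for r, c in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    edited[r][c] = 255  # one region was altered
print(likely_same_image(source, brighter))  # True
print(likely_same_image(source, edited))    # False
```

An 8x8 hash is coarse: it catches large edits but can miss a subtle face swap, so it works best as a quick first filter before closer inspection.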

AI image detectors

Several applications and websites can help, such as Everypixel, Aesthetics, Hugging Face, Optic AI or Not, Illuminarty, and Hive Moderation. These tools use machine learning to spot the traces that generators such as GANs leave behind, and some report up to 99% accuracy in identifying deepfake images.

In addition to the above, more articles are coming out with recommendations on how to better identify deepfakes. Organizations should look to educate their users early and often about these warning signs and update them as the technology becomes more sophisticated.

On a larger scale, companies can identify and respond to deepfakes through a combination of technology solutions, organizational policies, and education. Here are a few strategies to consider:

User education and training

Educate employees about the existence and risks of deepfakes, and teach them how to recognize and report suspicious content. Give them the tools and guidance they need to identify deepfake media, and encourage them to trust their instincts when something seems off. Plan and rehearse responses to potential cybersecurity threats involving deepfakes.

Governance and policies

Establish clear policies for handling deepfake incidents, including response strategies and communication plans, developed the same way you would for any potentially fraudulent activity. For example, implement a verification process like the one described below.

Internal controls and verification

Implement strong internal security protocols to prevent unauthorized access to company tools and data. Establish trusted channels for verifying the identity of people and the authenticity of content, in real time whenever possible. For example, confirm any urgent payment request through a separate, pre-registered contact method before acting on it.
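A verification step like this works best when it is a hard rule that payment systems enforce rather than a judgment call made under pressure. Below is a minimal sketch of one such rule, inspired by the Hong Kong case described earlier. Every name, channel label, and the threshold value are hypothetical assumptions, not an industry standard.

```python
from dataclasses import dataclass

# Hypothetical policy values; tune them to your organization.
CALLBACK_THRESHOLD = 10_000
REMOTE_CHANNELS = {"video_call", "voice_call", "email", "chat"}


@dataclass
class TransferRequest:
    requester: str         # who the request claims to come from
    amount: int            # amount in the company's base currency
    channel: str           # how the request arrived, e.g. "video_call"
    new_beneficiary: bool  # True if the destination account was never paid before


def needs_callback(req: TransferRequest) -> bool:
    """Remote requests that are large or go to new accounts need out-of-band confirmation."""
    risky = req.amount >= CALLBACK_THRESHOLD or req.new_beneficiary
    return risky and req.channel in REMOTE_CHANNELS


def passes_verification(req: TransferRequest, confirmed_via_directory_callback: bool) -> bool:
    """Allow the transfer only if no callback is needed or the callback succeeded.

    The callback must use contact details from an internal directory, never ones
    supplied in the request itself, since an impersonator controls those.
    """
    return not needs_callback(req) or confirmed_via_directory_callback


urgent = TransferRequest("CFO", 2_000_000, "video_call", new_beneficiary=True)
print(passes_verification(urgent, confirmed_via_directory_callback=False))  # False
```

The key design choice is that a convincing face or voice on a call never satisfies the check by itself; only a confirmation through an independent, known-good channel does.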

Metadata examination

Original, unaltered files usually keep their metadata intact, so missing or stripped metadata may indicate manipulation. It is not proof on its own (many platforms strip metadata on upload), so further investigation with metadata tools is necessary. Google, for instance, recently began requiring that AI-generated images submitted to its Merchant Center be labeled as such in their metadata.[13]
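As a first step in metadata examination, you can check whether a JPEG file still carries an EXIF block at all. The sketch below walks the JPEG marker segments that precede the image data and looks for an APP1 segment beginning with the `Exif` identifier. It is a simplified scanner written for illustration; it does not inspect other metadata formats (such as XMP or IPTC) or read individual EXIF fields.

```python
def has_exif(data: bytes) -> bool:
    """Return True if JPEG bytes contain an EXIF (APP1) segment before the image data.

    Simplified scanner: it walks the marker segments that precede the
    compressed image and does not handle every edge case in the JPEG spec.
    """
    if data[:2] != b"\xff\xd8":  # every JPEG starts with the SOI marker
        raise ValueError("not a JPEG file")
    i = 2
    while i + 4 <= len(data):
        if data[i] != 0xFF:
            return False  # not at a marker: malformed or unsupported layout
        marker = data[i + 1]
        if marker == 0xDA:  # SOS: compressed image data follows, no more metadata
            return False
        length = int.from_bytes(data[i + 2:i + 4], "big")  # counts its own 2 bytes
        if marker == 0xE1 and data[i + 4:i + 10] == b"Exif\x00\x00":
            return True
        i += 2 + length  # skip the marker bytes plus the segment
    return False
```

Usage on a real file might look like `has_exif(pathlib.Path("photo.jpg").read_bytes())`, where the file name is a placeholder. A `False` result is only a prompt for closer investigation, for the reasons given above.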

Digital watermarking

In some cases, digital watermarking is used to embed a unique code in authentic content, which can later be checked to verify where the content came from.
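To illustrate the embed-then-check idea, here is a minimal sketch of least-significant-bit (LSB) watermarking, one of the simplest watermarking techniques. It assumes the image is a flat list of 8-bit grayscale values; the secret key, the content ID, and the function names are hypothetical. The code is derived with an HMAC so that only the key holder can produce a valid mark for a given piece of content. LSB marks are fragile (resizing or recompression destroys them), so production watermarking schemes are considerably more robust than this.

```python
import hashlib
import hmac


def make_code(secret: bytes, content_id: bytes) -> bytes:
    """Derive an 8-byte code that only the key holder can produce for this content."""
    return hmac.new(secret, content_id, hashlib.sha256).digest()[:8]


def embed(pixels: list[int], code: bytes) -> list[int]:
    """Hide the code's bits in the least significant bit of the first pixels."""
    bits = [(byte >> (7 - k)) & 1 for byte in code for k in range(8)]
    if len(bits) > len(pixels):
        raise ValueError("not enough pixels for the code")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | bit  # changes each value by at most 1
    return marked


def extract(pixels: list[int], n_bytes: int) -> bytes:
    """Read n_bytes back out of the pixels' least significant bits."""
    out = bytearray()
    for b in range(n_bytes):
        value = 0
        for k in range(8):
            value = (value << 1) | (pixels[b * 8 + k] & 1)
        out.append(value)
    return bytes(out)


def verify(pixels: list[int], secret: bytes, content_id: bytes) -> bool:
    """Check that the embedded code matches the one expected for this content."""
    expected = make_code(secret, content_id)
    return hmac.compare_digest(extract(pixels, len(expected)), expected)
```

For example, `verify(embed(pixels, make_code(b"key", b"asset-1")), b"key", b"asset-1")` returns `True`, while the same check with a different key should fail.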

Invest in detection technology

Several deepfake detection tools can scan content and flag potential deepfakes by spotting inconsistencies or anomalies in audio, image, and video files. Keep these detection models updated so they keep pace with increasingly sophisticated deepfakes.

By adopting a multi-faceted approach that includes technological defenses, policy initiatives, and collaborative efforts, companies can better position themselves to detect and address the risks from malicious deepfakes, thereby protecting their brand integrity and the public's trust.

Staying ahead of deepfake technology

While deepfakes are raising concerns for individuals and companies alike, there are ways to stay aware and vigilant. As with most cybersecurity strategies, protecting yourself from malicious deepfakes requires an approach that combines people, processes, and technologies. With a robust user education program and smart policies in place, you'll be better equipped to handle this growing, increasingly sophisticated threat.

At CAI, we take a proactive approach to preventing cybersecurity threats before they happen. We work alongside our clients to evaluate their current cybersecurity environment, then assist with assessment and management, including threat detection, response, and remediation services. If you're interested in learning more about deepfakes or strengthening your cybersecurity posture, contact us.

Endnotes

1. The Associated Press. “AI-Generated Robocall Impersonates Biden in an Apparent Attempt to Suppress Votes in New Hampshire.” Newsday, January 23, 2024. https://www.newsday.com/news/nation/New-Hampshire-primary-Biden-AI-deepfake-robocall-k99268.

2. MSN, February 23, 2024. https://www.msn.com/en-us/news/politics/magician-created-ai-generated-biden-robocall-in-new-hampshire-for-democratic-consultant-report-says/ar-BB1iM7zE.

3. “Taylor Swift Deepfakes: Over 400 Experts and Celebrities Demand Stricter Laws after Multiple Cases of AI-Generated Explicit Images Surface.” MSN, February 22, 2024. https://www.msn.com/en-us/news/technology/taylor-swift-deepfakes-over-400-experts-and-celebrities-demand-stricter-laws-after-multiple-cases-of-ai-generated-explicit-images-surface/ar-BB1iHSwb.

4. Sumsub. “Sumsub Research: Global Deepfake Incidents Surge Tenfold from 2022 to 2023.” Sumsub, November 28, 2023. https://sumsub.com/newsroom/sumsub-research-global-deepfake-incidents-surge-tenfold-from-2022-to-2023/.

5. Finger, Lutz. “Overview of How to Create Deepfakes - It’s Scarily Simple.” Forbes, February 20, 2024. https://www.forbes.com/sites/lutzfinger/2022/09/08/overview-of-how-to-create-deepfakesits-scarily-simple/?sh=365d50fa2bf1.

6. Britton, Bianca. “Deepfake Videos of Tom Cruise Went Viral. Their Creator Hopes They Boost Awareness.” NBC News, March 5, 2021. https://www.nbcnews.com/tech/tech-news/creator-viral-tom-cruise-deepfakes-speaks-rcna356.

7. Hunt, James. “Mandalorian’s Luke Skywalker without CGI: Mark Hamill, Deep Fake & Deaging.” ScreenRant, May 2, 2022. https://screenrant.com/mandalorian-luke-skywalker-mark-hamill-no-cgi-deepfake-look/.

8. “Company Worker in Hong Kong Pays Out £20m in Deepfake Video Call Scam.” The Guardian, February 5, 2024. https://www.theguardian.com/world/2024/feb/05/hong-kong-company-deepfake-video-conference-call-scam.

9. “Map of Global AI Regulations - A New Era.” Fairly AI. Accessed February 26, 2024. https://www.fairly.ai/blog/map-of-global-ai-regulations.

10. Mirza, Rehan. “How AI-Generated Deepfakes Threaten the 2024 Election.” The Journalist’s Resource, February 17, 2024. https://journalistsresource.org/home/how-ai-deepfakes-threaten-the-2024-elections/.

11. Field, Hayden. “Microsoft, Google, Amazon and Tech Peers Sign Pact to Combat Election-Related Misinformation.” CNBC, February 17, 2024. https://www.cnbc.com/2024/02/16/tech-and-ai-companies-sign-accord-to-combat-election-related-deepfakes.html.

12. “Five Ways to Spot a Deepfake.” MSN. Accessed February 26, 2024. https://www.msn.com/en-us/news/technology/five-ways-to-spot-a-deepfake/ar-BB1ilTR6.

13. “Google Merchant Center Requires AI Generated Images to Be Labeled.” Search Engine Roundtable, February 20, 2024. https://www.seroundtable.com/google-merchant-center-requires-labels-ai-images-36922.html.